So it should be no secret that we use “This Person Does Not Exist” (TPDNE) as a reference model. To be honest, I have an Asian bias when I draw: growing up and in college, I trained on East Asian, Southeast Asian, and Caucasian faces. AI images that randomize ethnicity help overcome that bias. Of course, the result has to be transformative, and if you’ve tried to use TPDNE pictures, you will find that a face alone is not enough. In sci-fi, where a lot of important details are embedded in the image, players need to see the character in more context. So now that we have finished the first part of 2-Parsec, I am doing some housekeeping (arranging all the pictures so that it is easy for the GM to upload or print the portraits and use the maps) while Nicco researches and develops our face workflow.

Why hand-draw from 3D reference models when we could just use AI art all the way?

I can’t use AI, given all the legal hurdles and the ethics involved. It’s just me and Nicco. I know others can and do use it, like Cyborg Prime and John Wats, but we honestly can’t navigate it. I know there is “ethically sourced” AI: Nicco and I could possibly build the training data set ourselves if we had 2,000 USD lying around to set up Stable Diffusion with 24 GB of GPU RAM 😅 (sarcasm), and then it would be completely ethical. For example, one of the training data sets I saw is based on Artgerm, an artist I used to follow a lot on DeviantArt. I see his style used a lot.

It’s not all bad: we plan to build our own character generator with Blender and Godot over time. Using 3D models in Blender, posing them, and creating the 3D assets is part of our prep. Long term, we want a soft Ghibli color palette for a sci-fi setting with some dieselpunk and steampunk hardware, belonging to humans who have spent 500 years in space (the span of many human empires) and to beings (AIs) with personalities that were developed 500 years ago.

Right now, the quality of the art is all over the place. Since this is a part-time effort, stabilizing the process is where my QMS and classically trained art background come in. The human is there in the process to do what our tools cannot: create a portrait of a character with enough context that when players see it, they can spot details that are part of the story. That means a medium shot (upper torso and arms), a background for context (using our ship and space station modular model packages), and enough facial detail for players to tell characters apart. We hope to spend 30-60 minutes of work per character and to be able to produce up to 10 per week if needed, but typically I can turn out about 3 per week, and Nicco can probably do three times as many.
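A quick sanity check on those numbers, as a small sketch: it assumes “3x as much” means Nicco produces three times my weekly rate, and the names below are made up for illustration. Ten portraits at 30-60 minutes each come to 5-10 hours of work, and our combined typical rate (3 + 9 = 12 per week) would cover the 10-per-week target.

```python
# Hypothetical weekly-capacity check using the figures from this post.
MINUTES_PER_PORTRAIT = (30, 60)  # target time range per character
TARGET_PER_WEEK = 10             # "up to 10 per week if needed"
MY_RATE = 3                      # my typical portraits per week
NICCO_MULTIPLIER = 3             # assumption: "3x as much" = 3x my rate


def weekly_hours(portraits: int, minutes_each: int) -> float:
    """Total hours needed to finish `portraits` at `minutes_each` minutes apiece."""
    return portraits * minutes_each / 60


low, high = (weekly_hours(TARGET_PER_WEEK, m) for m in MINUTES_PER_PORTRAIT)
combined = MY_RATE + MY_RATE * NICCO_MULTIPLIER

print(f"{TARGET_PER_WEEK} portraits take {low:.0f}-{high:.0f} hours of work")
print(f"Combined typical output: {combined} portraits per week")
```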

Progress so far:

1) Found a free face-modeling tool that takes a 2D picture and shapes a 3D face to match it.

2) We are learning to use MB-Lab and Rigify to rig our space suits and model bodies later on.

Goals or Target Outputs of this project:

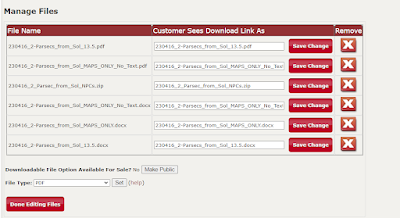

Workflow: from TPDNE + outfit (nice to have) + background (nice to have) to a finished portrait, then saving the file (and, if possible, including it in the package for GMs). This gives us better perspectives of the character.

1) Be able to draw the characters from more than a straight-on face perspective, using head position to convey additional context.

2) More consistent quality and a more predictable workflow.

3) When Nicco and I design more suits (vac suits for intra- or extravehicular activity, hazardous-environment suits, and combat dress), we can easily incorporate them into the characters.

4) Start with close portraits but move towards full-body (hi-res) images that can be used as 2D tokens or cropped for portrait-only tokens.

190mm focal length, according to the app (KeenTools face tool).

210mm focal length camera shot. A simple glasses model gives a reference for how the shadows will look.

35mm focal length
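To compare these shots, it can help to turn focal length into an angle of view. This is a minimal sketch using the standard pinhole-camera formula, FOV = 2 · atan(sensor width / (2 · focal length)). The 36mm sensor width is an assumption (it is Blender’s default camera sensor width); the face tool or a different camera setup may use another value, which would shift the angles. With that assumption, 35mm gives roughly a 54° horizontal view, while 190mm and 210mm narrow it to roughly 11° and 10°, which is why the long lenses look flatter and less distorted on a face.

```python
import math

# Assumption: Blender's default camera sensor width. Change it to match your camera.
SENSOR_WIDTH_MM = 36.0


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal angle of view (degrees) for a pinhole camera model."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Focal lengths mentioned in the captions above.
for focal in (35, 190, 210):
    print(f"{focal}mm -> {horizontal_fov_deg(focal):.1f} degrees")
```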